I'm a Doctoral Researcher in the Computational Social Science (CSS) Department at GESIS - Leibniz Institute for the Social Sciences. I'm fortunate to be advised by Prof. Claudia Wagner and Jun.-Prof. Gabriella Lapesa. My work sits at the intersection of NLP and social science. I am particularly interested in researching Fear Speech 👻.

Before GESIS, I was a research intern in Prof. Rajesh Sharma's Computational Social Science Group, where I worked on Hate Speech. If you'd like to chat with me, feel free to schedule a meeting through my Microsoft Calendar appointment link.

News

- Oct 2024 New Pre-print - "ProvocationProbe: Instigating Hate Speech Dataset from Twitter"

- Apr 2024 New Pre-print - "Analyzing Toxicity in Deep Conversations: A Reddit Case Study"

- Feb 2024 Our paper got accepted to the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024)

- Feb 2024 I will be joining the Computational Social Science department at GESIS starting in March 2024

- Aug 2023 Our paper got accepted to the 32nd ACM International Conference on Information and Knowledge Management (CIKM 2023)

Publications

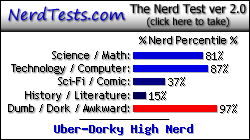

ProvocationProbe: Instigating Hate Speech Dataset from Twitter

Abhay Kumar, Vigneshwaran Shankaran, Rajesh Sharma

Preprint

Paper

In recent years, online social media platforms have been flooded with hateful remarks such as racism, sexism, and homophobia. As a result, social media platforms have taken many measures to mitigate the spread of hate speech over the internet. One particular concept within the domain of hate speech is instigating hate, which involves provoking hatred against a particular community, race, colour, gender, religion or ethnicity. In this work, we introduce ProvocationProbe, a dataset designed to explore what distinguishes instigating hate speech from general hate speech. For this study, we collected around twenty thousand tweets from Twitter, encompassing a total of nine global controversies. These controversies span various themes including racism, politics, and religion. In this paper, we (i) present an annotated dataset after a comprehensive examination of all the controversies, and (ii) highlight the difference between hate speech and instigating hate speech by identifying distinguishing features, such as targeted identity attacks and reasons for hate.

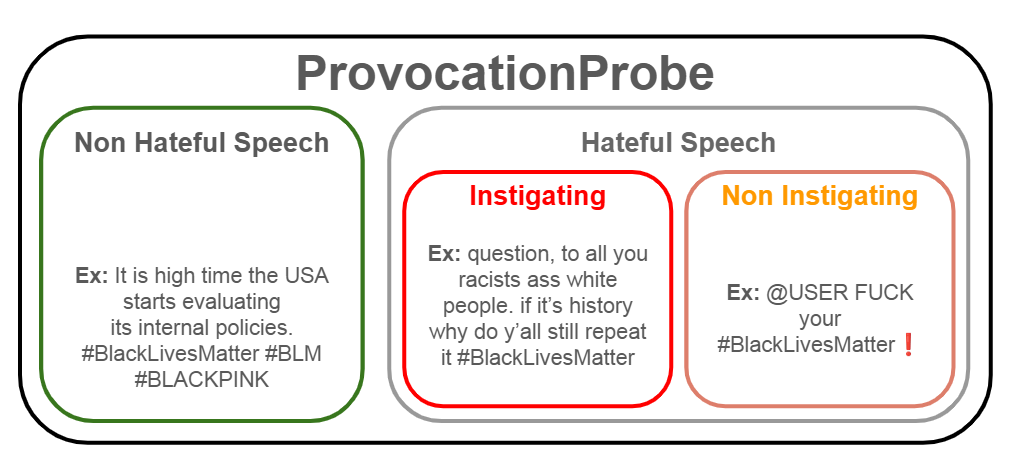

Analyzing Toxicity in Deep Conversations: A Reddit Case Study

Vigneshwaran Shankaran, Rajesh Sharma

Preprint

Paper Data

Online social media has become increasingly popular in recent years due to its ease of access and ability to connect with others. One of social media's main draws is its anonymity, allowing users to share their thoughts and opinions without fear of judgment or retribution. This anonymity has also made social media prone to harmful content, which requires moderation to ensure responsible and productive use. Several methods using artificial intelligence have been employed to detect harmful content. However, conversation and contextual analysis of hate speech are still understudied. Most promising works only analyze a single text at a time rather than the conversation supporting it. In this work, we employ a tree-based approach to understand how users behave concerning toxicity in public conversation settings. To this end, we collect both the posts and the comment sections of the top 100 posts from 8 Reddit communities that allow profanity, totaling over 1 million responses. We find that toxic comments increase the likelihood of subsequent toxic comments being produced in online conversations. Our analysis also shows that immediate context plays a vital role in shaping a response rather than the original post. We also study the effect of consensual profanity and observe overlapping similarities with non-consensual profanity in terms of user behavior and patterns.

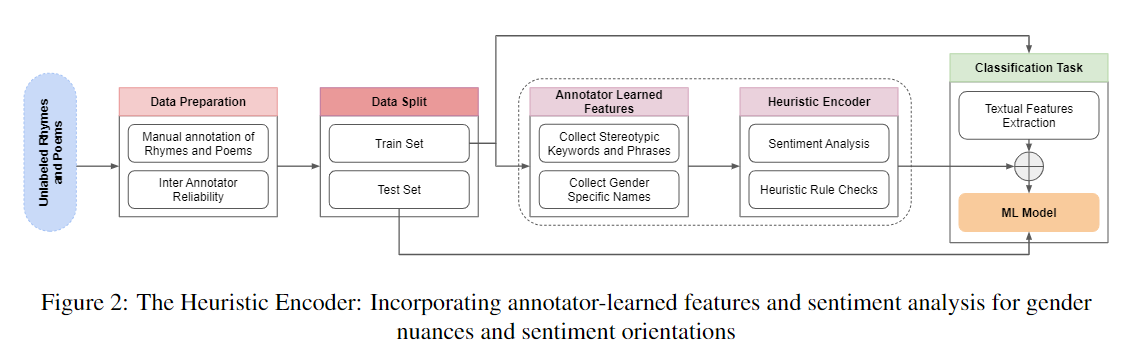

Revisiting The Classics: A Study on Identifying and Rectifying Gender Stereotypes in Rhymes and Poems

Aditya Narayan Sankaran*, Vigneshwaran Shankaran*, Sampath Lonka, Rajesh Sharma

LREC-COLING'24

Paper Data

Rhymes and poems are a powerful medium for transmitting cultural norms and societal roles. However, the pervasive existence of gender stereotypes in these works perpetuates biased perceptions and limits the scope of individuals' identities. Past works have shown that stereotyping and prejudice emerge in early childhood, and developmental research on causal mechanisms is critical for understanding and controlling stereotyping and prejudice. This work contributes by gathering a dataset of rhymes and poems to identify gender stereotypes. We then propose a model with 97% accuracy to address gender bias. Gender stereotypes were rectified using a Large Language Model (LLM) and human educators along with a survey comparing their effectiveness. The findings highlight the pervasive nature of gender stereotypes in literary works and reveal the potential of LLMs in rectifying gender stereotypes and encourage further research in this area. This study raises awareness and promotes inclusivity within artistic expressions, making a significant contribution to the discourse on gender equality.

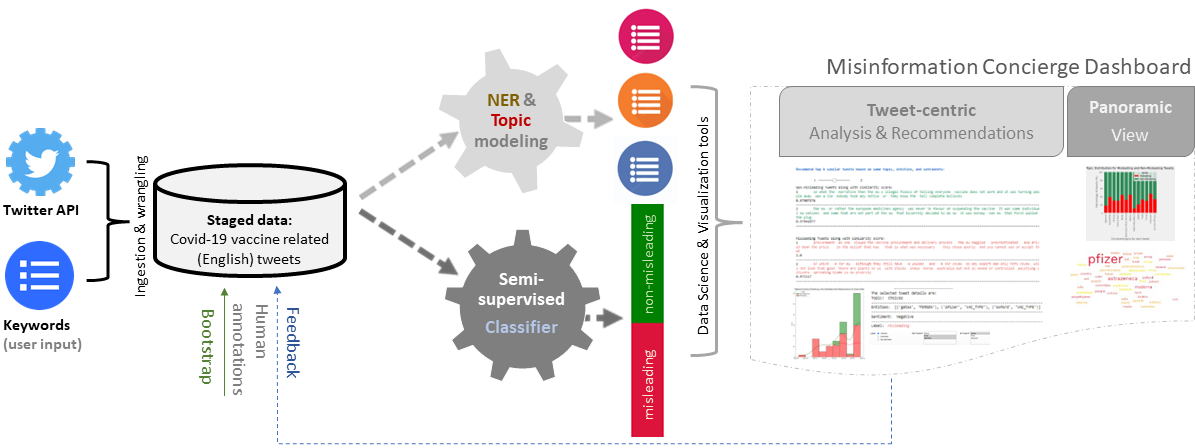

Misinformation Concierge: A proof-of-concept with curated Twitter dataset on COVID-19 vaccination

Shakshi Sharma, Anwitaman Datta, Vigneshwaran Shankaran, Rajesh Sharma

CIKM'23 Demo track

Paper

We demonstrate the Misinformation Concierge, a powerful tool that provides actionable intelligence on misinformation prevalent in social media. Specifically, it uses language processing and machine learning tools to identify subtopics of discourse and discerns non/misleading posts; presents statistical reports for policy-makers to understand the big picture of prevalent misinformation in a timely manner; and recommends rebuttal messages for specific pieces of misinformation, identified from within the corpus of data - providing means to intervene and counter misinformation promptly. The Misinformation Concierge proof-of-concept using a curated dataset is accessible at: https://demo-frontend-uy34.onrender.com/

Miscellaneous

- Romberg, Julia, Shankaran, Vigneshwaran, & Maurer, Maximilian (2025). Adapters: Lightweight Machine Learning for Social Science Research. GESIS Training. https://bsky.app/profile/gesistraining.bsky.social/post/3lplst3bdxc2a

- Breuer, Johannes, Soldner, Felix, & Shankaran, Vigneshwaran (2024). European Parliament Election 2024: German Candidates Social Media Activities. GESIS, Cologne. ZA8917 Data file Version 1.0.0, https://doi.org/10.4232/1.14455.

- Sub-Reviewer of the 2023 IEEE/ACM International Conference on Advances in Social Network Analysis and Mining.

- Master Thesis Supervision - Toxicity in Google Play Store Reviews: What, Where and Why? - 2022/3

- Co-organizer of the Computational Social Science (CSS) Workshop, Institute of Computer Science, University of Tartu, 2022.

- Teaching Assistant - MTAT.03.319 Business Data Analytics, University of Tartu - Fall 2022 & Spring 2023. Lectures are available here (access limited to UT students).